In an earlier post I discussed an architecture for defect tracking tools which would separate the concepts of symptoms and causes, thus allowing the tool to more closely resemble how problems manifest in real software and how engineers typically approach those problems. I mentioned the need for such a tool to link to a scientific database, or electronic lab notebook. This is necessary because the way we organize test data during the investigation of a macro problem is different from the way we track those problems. I also mentioned that the architecture would make it easier to roll the results of defect tracking into a larger knowledge base, allowing engineers to research issues they’re having by referring to a collection of previously observed symptoms. It occurs to me now that what I was describing was the beginning of a merger between defect tracking tools and expert systems.

Is this done anywhere? There are lots of expert systems used for diagnostics and there are lots of bug trackers, but are there systems that combine features of the two? The more I think about it, the more it seems like this is the only reasonable way to practice software engineering.

One way to approach the issue is to integrate a defect tracker and an expert system database as two distinct but closely coupled tools. If I were using the architecture I described earlier, I would start by going to my expert system. I would investigate my symptoms and determine whether or not there’s already a solution for my problem. That is, maybe my symptoms do not represent a software defect at all. Rather, diagnostics reveal a fault in hardware or installation parameters. If I don’t find a known solution, then the process of exercising the expert system has got me started on the path of breaking down my problem into well defined pieces. This will help me to design tests for further investigation and it will provide the basis for a new addition to the expert knowledge base once I’ve diagnosed my bug the hard way.

Upon exiting the expert system without a solution, I would turn to the bug tracker to document what I know. Maybe when I started I thought I had a single symptom, but my tour through the knowledgebase gave me a new perspective and I now create multiple symptoms. This will make it easier to migrate the information back into the knowledgebase when I’ve fixed the bug, and I can still link all of these symptoms to a single task. Remember, the primary goal of a defect tracker is to allow me to manage my work.
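The workflow above can be sketched in a few lines. This is only an illustration under stated assumptions: the knowledge base is modeled as a plain dictionary from symptom sets to explanations, the tracker as a list, and the names `KNOWLEDGE_BASE`, `lookup` and `triage` are hypothetical rather than taken from any real expert system or tracker.

```python
from typing import Optional

# Hypothetical knowledge base: a set of symptoms maps to a known explanation.
KNOWLEDGE_BASE = {
    frozenset({"no output", "power LED off"}): "hardware fault: check the power supply",
}


def lookup(observed: set) -> Optional[str]:
    """Return a known explanation whose symptoms are all among the observed ones."""
    for symptoms, explanation in KNOWLEDGE_BASE.items():
        if symptoms <= observed:
            return explanation
    return None


def triage(observed: set, tracker: list) -> Optional[str]:
    """Consult the knowledge base first; file the symptoms under a single
    investigation task only when it has no answer."""
    explanation = lookup(observed)
    if explanation is None:
        tracker.append({"task": "investigate", "symptoms": sorted(observed)})
    return explanation


tracker = []
known = triage({"no output", "power LED off", "fan silent"}, tracker)
unknown = triage({"garbled log", "slow startup"}, tracker)
```

In the first call the knowledge base already explains the problem, so nothing is filed. In the second, the two symptoms land in the tracker linked to one task, ready to be fed back into the knowledge base once the cause is found.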

To make life easier, maybe my defect tracker has a means of storing symptom data as expert system entities. That way I can move back and forth between the two tools in native format rather than, say, cutting and pasting text between them. That doesn’t mean I should shy away from free form text. My defect tracker should have room for this too, since in the early stages of problem investigation I might want to brain dump a lot of random thoughts and observations. The same can be said for the objects storing the causes.

What I’ve constructed here is a trio of inter-related tools: a defect tracker, an expert system knowledgebase and an electronic lab notebook. Each shares a two-way relationship with the other two. Clearly, the tracker is closely related to the knowledgebase, but test results in the notebook may be merged into the knowledgebase too without necessarily going through the tracker first. After all, you may stumble upon some properties of the system that don’t contribute much to your particular investigation but which are not currently represented in the knowledgebase and might be of use in the future. Likewise, the knowledgebase could aid in interpreting test results and iterating over test designs throughout the investigation process.

It occurs to me to ask the question: are these really three separate tools, or three views on the same model? I think ideally they are the latter. Practical concerns may cause us to deploy our environment with separate tools because we might be able to cobble them together from existing software. But I could imagine a single vendor building a defect tracker and lab notebook interface on top of an expert system which actually manages everything.

How About Defect Tracking With Expert Systems?

20 07 2010

Tags: Defect Tracking, Electronic Lab Notebook, Expert Systems

Categories : Uncategorized

How To Make Defect Trackers Better

15 07 2010

In a previous post, I discussed how the defect tracking tools with which I’m familiar don’t work well with approaching problems scientifically. Part of the problem is the organization of test results, which I may deal with in another entry, but I think the larger issue is that the terms “defect” and “bug” conflate several separate concepts. I don’t know if there are any defect tracking tools out there which handle these ideas appropriately, but I will detail my own approach to the problem. If anyone knows of a good tool which does likewise or does it better, then I’d be happy to hear about it.

The first objective is to define three independent terms: symptoms, causes and tasks. A symptom is some output or aggregation of outputs from your software. Outputs in this case may include crashes and other side effects from running the software that are not included in the intended vector of output (e.g. stream I/O or GUI). These are what most people would call bugs. A cause is the root cause of a symptom, such as a coding error or an incorrect parameter. A cause may result in more than one symptom, and multiple causes may have the same symptom. A task is something on your to-do list. Most tasks probably begin life as an investigation into the cause of a particular symptom. As tasks mature, they become well defined prescriptions for repairing a known cause.
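To make the three terms concrete, here is a minimal sketch of them as separate objects. It assumes Python dataclasses, and every class and field name (`Symptom`, `Cause`, `Task`, `TaskKind`, `link`) is hypothetical, chosen for illustration and not taken from any existing tracker.

```python
from dataclasses import dataclass, field
from enum import Enum


class TaskKind(Enum):
    INVESTIGATION = "investigation"  # looking for the cause of a symptom
    REPAIR = "repair"                # fixing a known cause


@dataclass
class Symptom:
    key: str
    description: str
    cause_keys: set = field(default_factory=set)  # many-to-many with causes


@dataclass
class Cause:
    key: str
    description: str
    symptom_keys: set = field(default_factory=set)


@dataclass
class Task:
    key: str
    kind: TaskKind
    target_key: str  # a symptom key while investigating, a cause key when repairing


def link(symptom: Symptom, cause: Cause) -> None:
    """Record that a cause explains a symptom, in both directions."""
    symptom.cause_keys.add(cause.key)
    cause.symptom_keys.add(symptom.key)


s1 = Symptom("S-1", "crash on startup")
s2 = Symptom("S-2", "garbled log output")
c1 = Cause("C-1", "uninitialized buffer")
link(s1, c1)
link(s2, c1)  # one cause may produce several symptoms
t1 = Task("T-1", TaskKind.REPAIR, target_key=c1.key)
```

Keeping the symptom-to-cause relationship many-to-many in both directions is what allows one cause to explain several symptoms and one symptom to have several causes.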

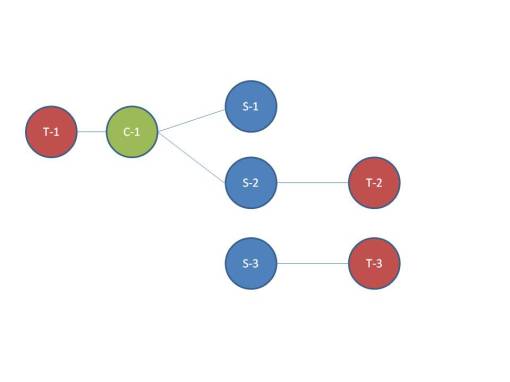

Consider the following graph:

The “S” terms represent symptoms and the “T” terms represent tasks. We don’t know why whatever’s happening is happening yet, so there are no causes. Since we also don’t know how any of the symptoms might be related, we create a separate task to investigate each. After some investigation, I discover a cause that explains some of my symptoms, and my graph looks like this:

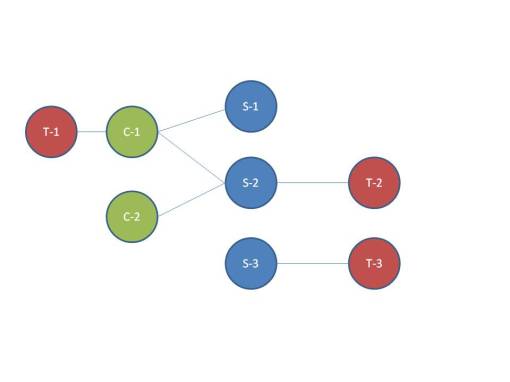

Notice that I re-linked T-1 so that it refers to the cause rather than the symptoms. I could’ve handled this in other ways. For example, I could’ve linked T-1 to each of the symptoms and if I wanted to trace T-1 back to the root cause I could’ve done so via the link between the symptoms and the causes. However, it seems more appropriate that once my task has changed from an investigation to a repair job, it should be linked directly to the problem which it is repairing. Reasons for this should become apparent later, beginning with what we see in the next graph:

There’s no reason why multiple causes can’t have the same symptom. I might repair C-1 and still see S-2 pop up from time to time. Therefore I still need a task to investigate this symptom. I suppose there’s nothing wrong with allowing both T-1 and T-2 to link to S-2, but it’s cleaner to link repair tasks directly to causes and investigation tasks directly to symptoms. This will probably make my search queries a lot easier too, especially if I tend to automate them to produce reports.
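The linking rule (repair tasks point at causes, investigation tasks point at symptoms) is what keeps report queries simple. A small sketch, assuming a flat list of tasks; `Task`, `promote_to_repair` and `open_investigations` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Task:
    key: str
    kind: str    # "investigation" or "repair"
    target: str  # symptom key for investigations, cause key for repairs


def promote_to_repair(task: Task, cause_key: str) -> None:
    """Turn an investigation into a repair job linked directly to its cause."""
    task.kind = "repair"
    task.target = cause_key


def open_investigations(tasks: list) -> list:
    """Report query: symptoms that still need a cause tracked down."""
    return sorted(t.target for t in tasks if t.kind == "investigation")


tasks = [
    Task("T-1", "investigation", "S-1"),
    Task("T-2", "investigation", "S-2"),
]
promote_to_repair(tasks[0], "C-1")  # C-1 was found, so T-1 becomes a repair job
still_open = open_investigations(tasks)
```

Because each kind of task targets exactly one kind of object, the report is a single filter on `kind` with no need to walk through symptoms to reach causes.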

Note that it’s possible that when I first find C-1, I withdraw T-2 from my task queue because I think I’m going to kill two birds with one stone and I don’t need another task. After all, I don’t know that there’s another cause for S-2 until I fix C-1 and see that S-2 still happens. That’s okay though. I can just create a new task if I have to.

With a traditional defect tracker, which places symptoms, tasks and causes all in one entry, doing this may have been trickier. For example, maybe I’d just withdraw (or worse, delete) T-2 and then when I found that S-2 was still around, I’d either have to go find T-2 in my database again (a difficult task given the poor search function in many trackers) or I’d have to create a new task and copy over all the relevant data from T-1. Of course, when I first discovered C-1, I would’ve copied the data from T-2 over to T-1 before withdrawing T-2. Or, maybe I would’ve just linked T-1 to T-2 when I discovered C-1, leaving me again with the job of creating a copy of T-2 to track S-2 back to C-2 independently from all the stuff that’s in T-1 and its data. This is all very messy. It wastes the time of engineers and it makes following a chain of events or searching defect history all the more complicated. These problems are solved by doing something very simple, which is to separate symptoms, causes and tasks into separate objects.

I can further ease my bug fixing pain by marking my symptoms with red/yellow/green indicators of some sort. A red symptom is one with no known cause. A yellow symptom is one with a known cause but for which that cause has not been fixed or for which we have insufficient test data to convince ourselves that the problem has really been resolved. A green symptom is one that no longer occurs in the system. Of course, there’s always the problem of proving the negative. I know we can never color a symptom green if we want perfection, but nobody’s perfect. We all have some threshold marking the point at which we’re willing to call a problem solved. This color coding allows us to easily see the status of our defect tracking efforts. I can withdraw T-2 and unlink it from S-2 if I want. If solving C-1 doesn’t make S-2 go away, I don’t have to go searching for S-2 because it’s probably still on my list of yellow symptoms and is easy to find. Otherwise, no sweat. It’s easier to write search tools for symptoms without all the extra chatter from causes and test data, so I should be able to find it if I really have to.
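The color rules can be derived from the links rather than set by hand. The sketch below assumes two flags that the post does not name: `fixed` on a cause, and `verified_gone` on a symptom to stand for "we have enough test data to call it solved". All names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Cause:
    key: str
    fixed: bool = False


@dataclass
class Symptom:
    key: str
    causes: list = field(default_factory=list)
    verified_gone: bool = False  # assumption: the team's own "solved" threshold was met


def color(symptom: Symptom) -> str:
    """Red: no known cause. Green: every known cause is fixed and the symptom
    is verified gone. Yellow: everything in between."""
    if not symptom.causes:
        return "red"
    if all(c.fixed for c in symptom.causes) and symptom.verified_gone:
        return "green"
    return "yellow"


s2 = Symptom("S-2")
before_cause = color(s2)

c1 = Cause("C-1")
s2.causes.append(c1)
cause_known = color(s2)

c1.fixed = True
fixed_unverified = color(s2)

s2.verified_gone = True
solved = color(s2)
```

Note that fixing C-1 alone leaves S-2 yellow, which is exactly why it stays easy to find if it turns out to have a second cause.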

There’s one further complexity that comes to mind. While causes have precise definitions, symptoms often don’t. Since I don’t know what conditions might be relevant to a symptom (if I did, I’d know the cause!), I’m probably only going to record the most complete description of the problem I have at the time. I’m bound to leave out some details. What happens when I think that S-2 is happening again and upon further investigation I discover that it’s really a slight variation on S-2? It’s possible that a variation on S-2 has a completely separate cause. You’d hope not, since good software design should separate control structures enough that similar symptoms are related to a single locus of control, but then again the more poorly designed the system, the more you need a sophisticated defect tracker to help you dig yourself out of the hole you’re in.

There are other variations on this theme too. For example, the new not-quite-S-2 symptom may very well have a cause that’s closely related to C-1, but because of your particular project’s process cycle you need to open a new issue. You could always create a new task for C-1 and fill out some more data explaining the broader problem, but there’s probably nothing wrong with creating a new cause and linking it to the new symptom with a new task. You could always refer back to C-1 if you wanted, and one could imagine an even more complex approach where causes are grouped into families. But why go there if this approach gives us what we need?

Whatever the case, we’re faced with the prospect of merging and splitting symptoms. We may decide that one symptom has two variations or that two symptoms need to be merged. Merging symptoms is easy. It’s always easy to simplify. All causes and tasks linked to the old symptoms can be automatically linked by the tool to the new merged symptom. Splitting them may be more complex. If I already have a structure built up around a symptom, I may have to manually iterate through each object that’s linked to it and decide what stays linked to the old symptom and what should be moved to the new one. In most cases this should be simple. There should be one task and at most a couple of causes. There’s probably no good way to automate this. It takes human intelligence to notice the split in the first place, and it takes further intelligence to figure out what that means for the data you’ve already built up. Figuring out how to re-organize the data is what engineers are paid for. The tool is there to make things easier.
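Merging is the part a tool can do on its own. As a rough sketch, assume the graph is stored as a set of (source, target) link pairs; the function name `merge_symptoms` and this storage format are illustrative assumptions, not a description of any real tracker.

```python
def merge_symptoms(links: set, old: str, new: str) -> set:
    """Re-point every link that touches `old` at `new`.

    `links` holds (source, target) pairs such as ("T-2", "S-2") for a task
    investigating a symptom, or ("S-2", "C-1") for a symptom explained by a cause.
    """
    def rename(key: str) -> str:
        return new if key == old else key

    # A set drops the duplicates that appear when both symptoms shared a link.
    return {(rename(a), rename(b)) for a, b in links}


links = {("T-2", "S-2"), ("S-2", "C-1"), ("T-3", "S-3"), ("S-3", "C-1")}
merged = merge_symptoms(links, old="S-3", new="S-2")
```

Splitting has no equivalent one-liner: each link on the original symptom needs a human decision about which side of the split it belongs to.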

Something else that this structure makes easier is organizing test data. The test data will likely be stored in some repository outside this tool. When I’m investigating a symptom, I may run lots of tests and store them in my scientific notebook. I can link these tests to my task, since that’s really what the task stands for. The data moves with the task when it becomes a repair task and I can append to it with test data that’s intended to prove that the problem no longer exists.

Note that I can use this system for more than defect tracking. I can also use it to build up a “help” database. Many times, what users interpret as a defect is just a misunderstanding of how the software is supposed to work. By users, I generally mean integrators, since this tool is a development tool and not something for the end user. I can now create symptoms and causes, linking them with any complexity I want, without creating tasks (because there’s nothing for me to fix). With a front end search tool, a user can search for the symptoms she’s seeing and find out if it’s just an input problem or if it’s a known bug.
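A front end search over such a help database could be as simple as the sketch below. It assumes naive keyword matching on the symptom description, and `HelpEntry`, `is_defect` and `search` are hypothetical names; a real tool would presumably want something better than substring search.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HelpEntry:
    symptom: str
    cause: str
    is_defect: bool  # False means an input or usage problem, so there is no task


def search(entries: list, query: str) -> list:
    """Return entries whose symptom text contains every word of the query."""
    words = query.lower().split()
    return [e for e in entries if all(w in e.symptom.lower() for w in words)]


entries = [
    HelpEntry("Import fails with empty file list", "input directory not configured", False),
    HelpEntry("Import crashes on files over 2 GB", "32-bit size field", True),
]
hits = search(entries, "import empty")
```

The integrator searching for "import empty" finds a symptom linked to a cause with no task attached, which tells her it is a configuration issue rather than a known bug.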


Tags: Defect Tracking, Software Architecture

Categories : Uncategorized
